With some imagination, Data Analytics can be used to aid decision making in almost any aspect of business.

Synopsis

We were invited by a Fortune 100 client to audit an implementation of Oracle EBS in a new market whose go-live was imminent. We analyzed the testing coverage, conversions, setups and configurations, transactions and error logs to assess the quality of the implementation and whether it was ready to go live. Among the large number of issues and discrepancies we found, we identified 5 critical areas in which the implementation could fail. All conclusions were data driven and irrefutable. We also provided strategies to recover the system if these areas failed. True to our prediction, the 5 areas we identified had issues after go-live, but the client was able to prevent major problems because it had been forewarned.

Background

A Fortune 100 client was implementing Oracle EBS in a new and key market segment. They had been using EBS in their existing markets for a few years and wanted to expand the implementation to this new market. Towards the end of the implementation, when the ‘go-live’ was in sight, we were invited to audit the implementation and provide input for the ‘Go/No Go’ decision.

This document focuses only on the use of data and analytics for auditing the implementation. Other statistical and process analyses that were carried out are not included in this paper.

Discovery

We entered the scene during the integration testing phase, when all the development and configuration had been completed. The first warning signs were that, despite the advanced stage of the project, the project team was extremely busy and documentation was difficult to get.

However, we had access to the test environment for the last round of testing, the user testing environment and the existing production environment.

Audit Approach

We collected data from the system as well as from people to start our analysis:

- Project documents such as Requirements, Design documents, Test Strategy and Plans, and the Traceability Matrix.

- Issue logs

- Interviews with key participants

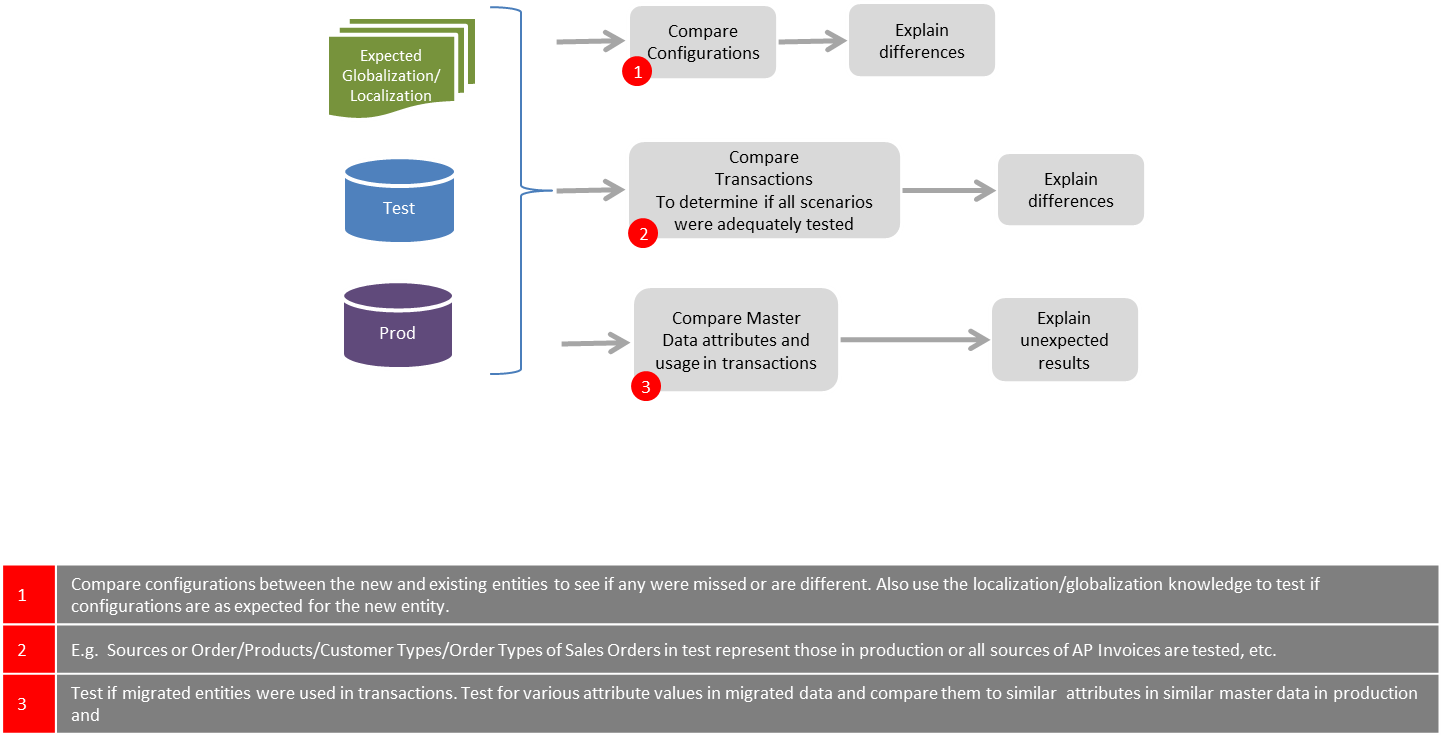

Configurations and Setups: The quality and comprehensiveness of the application configuration and setups were analyzed by comparing them with Global setups, localizations and setups required for specific business requirements. The setups in the testing environment were compared to the existing production environment and to the expected configuration for market-specific localizations and globalization.
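The setup comparison described above can be sketched roughly as follows. This is a minimal illustration, not the tooling used in the audit: it assumes the setups of each environment have already been extracted into simple name-to-value mappings, and every setup name and value shown is invented.

```python
def diff_setups(test_setups, prod_setups, expected_local=frozenset()):
    """Classify differences between two {setup_name: value} mappings.

    Names in expected_local are market-specific localizations that are
    allowed to differ, so they are left out of the report.
    """
    test_keys = set(test_setups) - set(expected_local)
    prod_keys = set(prod_setups) - set(expected_local)
    return {
        "missing_in_test": sorted(prod_keys - test_keys),
        "extra_in_test": sorted(test_keys - prod_keys),
        "value_mismatch": {
            name: (test_setups[name], prod_setups[name])
            for name in sorted(test_keys & prod_keys)
            if test_setups[name] != prod_setups[name]
        },
    }


# Hypothetical setup extracts; the names and values are invented.
prod_setups = {"DEFAULT_LINE_TYPE": "STANDARD", "AUTO_INVOICE": "Y", "CALENDAR": "FISCAL"}
test_setups = {"DEFAULT_LINE_TYPE": "STANDARD", "AUTO_INVOICE": "N", "TAX_REGIME": "LOCAL_VAT"}

setup_report = diff_setups(test_setups, prod_setups, expected_local={"TAX_REGIME"})
print(setup_report)
```

Each entry in the report is only a candidate discrepancy; whether it is a defect or an intended localization still has to be judged against the requirements.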

Data migration: The quality of the data migrations was audited by comparing the percentage of successful conversions. We compared the converted data column by column with the data of global and similar markets. We also checked how the master and transaction data performed in the end-to-end processes.
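As a rough sketch of these two checks, the snippet below computes a conversion success percentage and compares, column by column, how often each column is populated in the new market versus a reference market. The fill-rate metric, the 0.2 tolerance, and the sample rows are all assumptions made for illustration; the audit may well have used other column-level measures.

```python
def success_rate(attempted, converted):
    """Percentage of source records that converted successfully."""
    return 100.0 * converted / attempted if attempted else 0.0


def fill_rates(rows, columns):
    """Share of rows with a non-empty value, per column."""
    total = len(rows)
    return {
        col: (sum(1 for row in rows if row.get(col) not in (None, "")) / total
              if total else 0.0)
        for col in columns
    }


def columns_to_review(new_rows, reference_rows, columns, tolerance=0.2):
    """Columns whose fill rate differs from the reference by more than tolerance."""
    new = fill_rates(new_rows, columns)
    ref = fill_rates(reference_rows, columns)
    return [col for col in columns if abs(new[col] - ref[col]) > tolerance]


# Hypothetical converted customer records; all values are invented.
new_market = [
    {"payment_terms": "NET30", "tax_code": "A"},
    {"payment_terms": "", "tax_code": "A"},
    {"payment_terms": None, "tax_code": "B"},
    {"payment_terms": "NET45", "tax_code": "B"},
]
reference_market = [
    {"payment_terms": "NET30", "tax_code": "A"},
    {"payment_terms": "NET30", "tax_code": "B"},
    {"payment_terms": "NET45", "tax_code": "A"},
    {"payment_terms": "NET60", "tax_code": "B"},
]

customer_rate = success_rate(attempted=200, converted=150)
flagged = columns_to_review(new_market, reference_market, ["payment_terms", "tax_code"])
print(customer_rate, flagged)
```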

Extent of testing: We compared the test environment to production to evaluate whether all significant production scenarios were adequately tested.

Transactions: We compared the end-to-end transactions in the testing environment to those in production to check the extent to which the test scenarios covered the various business processes. For example:

- To assess the coverage of the testing scenarios, and whether they were consistent with global business processes, we analyzed a large number of different parts, customers, order types, line types, vendors, etc., and their combinations, that were used in the testing environment and compared them to what existed in production.

- We checked whether all the sources were being used for the various transactions like Orders, Customer Invoices, PO, AP Invoices etc.

- We collected data on the number of transactions that ran successfully end to end, for example, whether an EDI Sales Order could be successfully invoiced and collected. We found a correlation between errors and types of transactions.
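One way to picture the combination check is to count how much of the production volume falls on attribute combinations that were exercised at least once in testing. The sketch below assumes transactions have been exported as simple records; the field names and sample data are hypothetical, and volume weighting is an illustrative choice rather than a documented part of the audit.

```python
from collections import Counter


def combination_coverage(prod_txns, test_txns, keys):
    """Compare attribute combinations seen in production with those tested."""
    prod_counts = Counter(tuple(txn[k] for k in keys) for txn in prod_txns)
    tested = {tuple(txn[k] for k in keys) for txn in test_txns}
    # Untested combinations, largest production volume first.
    untested = sorted(
        ((combo, n) for combo, n in prod_counts.items() if combo not in tested),
        key=lambda item: item[1],
        reverse=True,
    )
    total = sum(prod_counts.values())
    untested_volume = sum(n for _, n in untested)
    return {
        "prod_combinations": len(prod_counts),
        "tested_combinations": len(prod_counts) - len(untested),
        "volume_coverage": (total - untested_volume) / total if total else 0.0,
        "untested": untested,
    }


# Hypothetical order extracts; field names and values are invented.
prod_orders = [
    {"order_type": "STANDARD", "source": "EDI"},
    {"order_type": "STANDARD", "source": "EDI"},
    {"order_type": "STANDARD", "source": "MANUAL"},
    {"order_type": "RETURN", "source": "MANUAL"},
]
test_orders = [
    {"order_type": "STANDARD", "source": "MANUAL"},
    {"order_type": "RETURN", "source": "MANUAL"},
]

coverage = combination_coverage(prod_orders, test_orders, keys=("order_type", "source"))
print(coverage)
```

In this invented sample, two of three combinations were tested, yet the untested one carries half of the production volume, which is the kind of gap a simple count of test cases would hide.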

Information Proxies: The sources of the various transactions in a test environment can be used as a proxy for the extent and coverage of testing. Comparing these numbers to those of a comparable market shows the extent of the testing coverage. For example:

- Sources of posted Journal Entries show the number of upstream transactions that were completed.

- Comparing the mix of sources of posted Journal Entries with that of a comparable existing market shows the proportion of scenarios tested end-to-end.

- Sources of Sales Orders show the extent of manual quote conversions and EDI testing

- Sources of AP transactions show whether all the interfaces were tested, that is, whether expenses, p-card, manually entered vendor invoices, self-service vendor invoices, intercompany, etc. were tested end-to-end.
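The source-mix proxy can be illustrated with a short sketch that compares the share of each source in the test environment with its share in a comparable market. It assumes one source label per posted journal entry has been extracted; the labels and counts below are made up for the example.

```python
from collections import Counter


def source_shares(sources):
    """Share of records per source label."""
    counts = Counter(sources)
    total = sum(counts.values())
    return {source: n / total for source, n in counts.items()}


def compare_source_mix(test_sources, reference_sources):
    """Map each reference source to (share in test, share in reference)."""
    test = source_shares(test_sources)
    ref = source_shares(reference_sources)
    return {source: (test.get(source, 0.0), share) for source, share in sorted(ref.items())}


# Hypothetical journal entry sources; labels and counts are invented.
reference_je = ["Receivables"] * 5 + ["Payables"] * 3 + ["Inventory"] * 2
test_je = ["Receivables"] * 8 + ["Payables"] * 2

mix = compare_source_mix(test_je, reference_je)
untested_sources = [source for source, (test_share, _) in mix.items() if test_share == 0.0]
print(mix)
print(untested_sources)
```

A source that is present in the comparable market but absent from the test environment suggests that the corresponding upstream process was never run through to posting.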

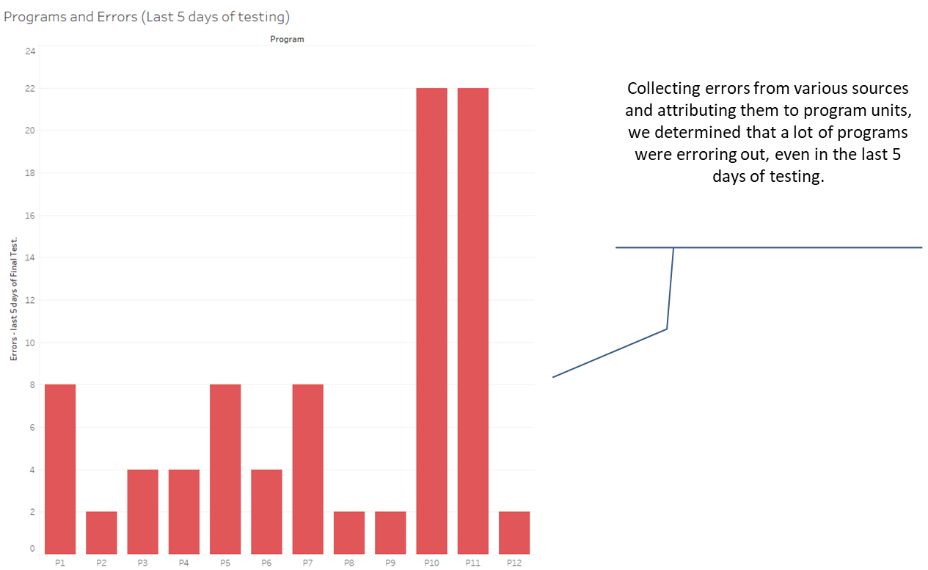

Errors: Concurrent program error logs and custom error logs were analyzed to understand the failures and possible root causes.
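A simple way to turn such logs into a shortlist is to rank programs by how often their runs ended in error. The sketch below is illustrative only: the record layout, status values and program names are hypothetical and do not represent the actual EBS log format.

```python
from collections import Counter


def rank_by_error_rate(runs, top_n=5, min_runs=1):
    """Rank programs by error rate, breaking ties by error count."""
    total = Counter(run["program"] for run in runs)
    failed = Counter(run["program"] for run in runs if run["status"] == "ERROR")
    rows = [
        (prog, failed[prog], total[prog], failed[prog] / total[prog])
        for prog in total
        if total[prog] >= min_runs
    ]
    rows.sort(key=lambda row: (row[3], row[1]), reverse=True)
    return rows[:top_n]


# Hypothetical run history; program names and statuses are invented.
runs = (
    [{"program": "PROG_A", "status": "ERROR"}] * 3
    + [{"program": "PROG_A", "status": "COMPLETED"}]
    + [{"program": "PROG_B", "status": "ERROR"}]
    + [{"program": "PROG_B", "status": "COMPLETED"}] * 3
    + [{"program": "PROG_C", "status": "COMPLETED"}] * 2
)

worst = rank_by_error_rate(runs, top_n=2)
for program, errors, total_runs, rate in worst:
    print(program, errors, total_runs, rate)
```

The `min_runs` parameter is there so that a program that ran once and failed does not outrank a program that fails consistently over many runs.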

Controls: The following were some of the controls we analyzed:

- Document and Code Review

- Issue logging and resolution

- Code versioning and promotion

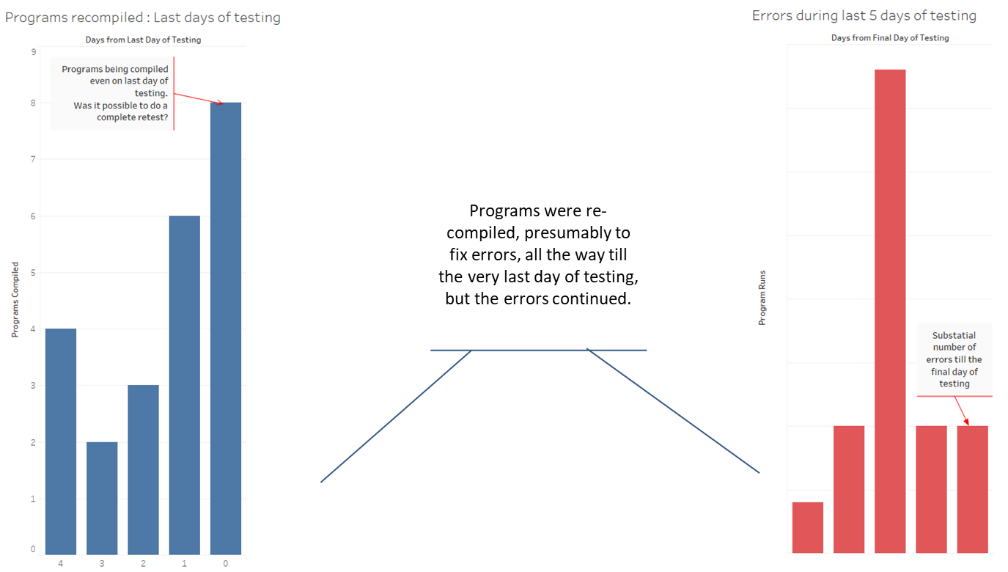

- How often, and how late in the test cycle, custom programs were modified and whether the changes were authorized.
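The last control lends itself to a small summary per custom program: number of changes, date of the latest change, and how many changes lacked authorization. The following is a minimal sketch assuming a change log is available as simple records; the field names, program names and dates are invented.

```python
from datetime import date


def summarize_changes(change_log):
    """Per program: change count, unauthorized count and latest change date."""
    summary = {}
    for entry in change_log:
        stats = summary.setdefault(
            entry["program"],
            {"changes": 0, "unauthorized": 0, "last_change": entry["changed_on"]},
        )
        stats["changes"] += 1
        stats["unauthorized"] += 0 if entry["authorized"] else 1
        stats["last_change"] = max(stats["last_change"], entry["changed_on"])
    return summary


# Hypothetical change log; all names and dates are invented.
change_log = [
    {"program": "PROG_A", "changed_on": date(2020, 1, 10), "authorized": True},
    {"program": "PROG_A", "changed_on": date(2020, 3, 2), "authorized": False},
    {"program": "PROG_B", "changed_on": date(2020, 1, 5), "authorized": True},
]

change_summary = summarize_changes(change_log)
for program, stats in sorted(change_summary.items()):
    print(program, stats)
```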

Findings and Conclusions

We found and reported a large number of issues and discrepancies in the data, testing and setups. We also provided recommendations for fixing some critical issues and for future implementations. The following pictures show two of the most compelling findings.

Relying on data, we were able to isolate 5 of the numerous programs for an extensive deep dive. Since everything was backed by data, the client found it easy to accept our findings as facts rather than just our opinion.

Using only the data collected from the implementation, we were able to identify 5 critical programs, out of the large number of programs, that would benefit from a deep dive. It was clear that the process areas covered by these 5 programs/customizations were not ready to go live. We were able to highlight specific issues with these programs. Our recommendation was to go live but to have backup manual processes ready to cover these 5 programs and to prepare a plan to work on stabilizing them.

Our conclusions were backed by hard data and hence were readily accepted by the client.

As it turned out, the go-live was largely successful; however, the 5 programs we had identified had to be redesigned and redeployed.
